Sep 17, 2023

You have plenty of data and are eager to try a new ML model to boost your sales, integrate with OpenAI, or hire a data scientist to extract value from your data. If you don’t monitor Data Quality (DQ) metrics within your pipelines, chances are that the data you have is incomplete, inconsistent or outdated.

Managing the quality of data has become a growing challenge as the velocity and variety of data increase. To build an effective ML model or to draw any insight from the data, you need a sensible level of DQ.

Historically, with an RDBMS at the core of the corporate DWH, data quality control was usually automated via built-in checks of value type conformity, referential integrity and uniqueness. Inconsistent data would throw an error for support engineers to investigate. In terms of the well-known CAP theorem, the business requirement was to have Consistency and Partition Tolerance (integrity) over Availability.
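A minimal sketch of those three built-in controls, assuming SQLite (from the Python standard library) as a stand-in for a corporate RDBMS. The `customers` and `orders` tables and the `try_insert` helper are hypothetical and exist only for illustration.

```python
import sqlite3


def try_insert(conn, sql, params):
    """Run one INSERT and report whether the database accepted it."""
    try:
        conn.execute(sql, params)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE                              -- uniqueness
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),  -- referential integrity
        amount      REAL NOT NULL CHECK (typeof(amount) = 'real')  -- value type conformity
    );
""")

results = {
    "valid customer": try_insert(
        conn, "INSERT INTO customers VALUES (?, ?)", (1, "a@example.com")),
    "duplicate email": try_insert(
        conn, "INSERT INTO customers VALUES (?, ?)", (2, "a@example.com")),
    "valid order": try_insert(
        conn, "INSERT INTO orders VALUES (?, ?, ?)", (1, 1, 19.99)),
    "unknown customer": try_insert(
        conn, "INSERT INTO orders VALUES (?, ?, ?)", (2, 99, 5.0)),
    "non-numeric amount": try_insert(
        conn, "INSERT INTO orders VALUES (?, ?, ?)", (3, 1, "ten")),
}

for name, outcome in results.items():
    print(f"{name}: {outcome}")
```

The database itself rejects the three inconsistent rows, which is the kind of error a support engineer would then investigate.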

As the demand for processing vast volumes of both structured and unstructured data within increasingly tight timeframes escalated, the concepts of the Data Lake and, subsequently, the Data Lakehouse began to surface. These innovations were supported by technologies that prioritise Availability at the expense of either Consistency or Partition Tolerance. The quality of the data is no longer guaranteed.

Read the full version

Aug 24, 2023

Recently, I talked with a tech expert about this topic and realised how many choices are available. It's easy to get confused by all the terms. You might set up very strong backup systems (and pay a lot for them), but if you forget to design the system so that it keeps running smoothly or recovers on its own, the service might still not work well.

Think of redundancy, resilience, and recoverability as parts of a plan to keep your business going. You need to consider all of them together to make sure your service works like it should and you can get back lost data.

Collectively, these three concepts are fundamental to ensuring the business continuity of cloud workloads. In the broader context, a Business Continuity Plan (BCP) is an essential part of the planning process for CIOs and CTOs. That means service continuity requirements for any cloud workloads (or, better, applications) are already defined.

Read the full version

May 26, 2023

Dutch version

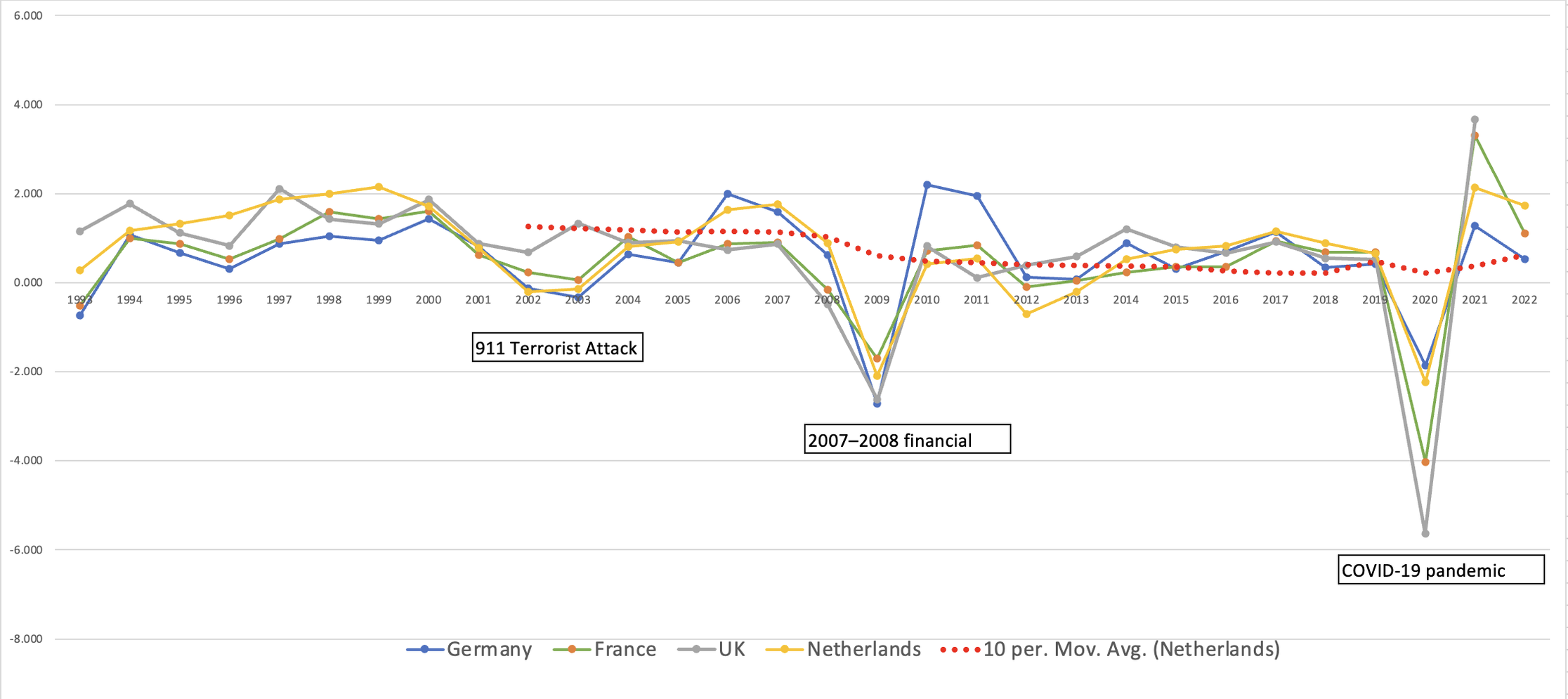

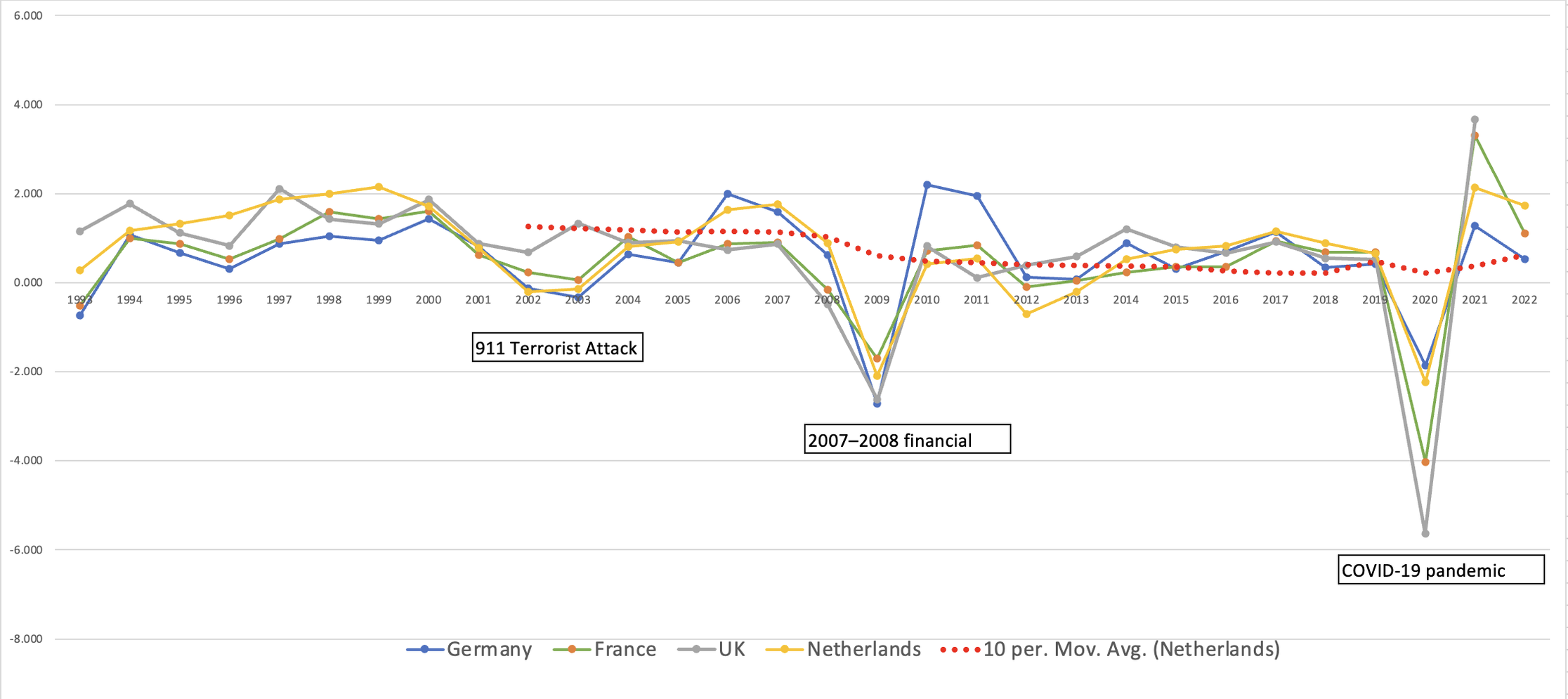

It is natural to assume that advancements in technology inevitably lead to growth in personal productivity. The growing availability of data and a new generation of business tools are among the most anticipated drivers of that impact. However, the trends of the past two decades tell a different story.

Personal productivity growth in the Netherlands (as in other rich countries) has been diminishing for the past two decades. That might be due to the paradox of a growing cognitive load combined with a limited capacity to change.

Personal productivity growth % from 1993 to 2022


Cognitive load refers to the mental effort required to process information, make decisions, and carry out tasks. While technology has undoubtedly provided us with tools to streamline certain aspects of our business, it has also introduced a vast amount of information and distractions that demand our attention. As a result, our cognitive load has increased significantly, potentially offsetting the benefits of technological advancements.

Read the full version
